Quantum computing is a groundbreaking technology that harnesses the strange and powerful principles of quantum mechanics to process information in ways that traditional computers cannot. Unlike classical computers, which use bits to represent data as either 0 or 1, quantum computers employ quantum bits, or qubits, which can exist in a superposition of 0 and 1. This capability could let them solve certain complex problems, such as simulating chemical reactions and possibly optimizing global supply chains, far more efficiently than classical systems. But why is quantum computing so different, and why has it only recently become feasible after decades of being a theoretical dream? This article explores what quantum computing is, how it differs from classical computing, its potential impact, the historical challenges that delayed its development, and the milestones that have brought us to today, July 18, 2025.

Layman’s Explanation: What is Quantum Computing?

Think of a classical computer as a chef following a recipe, measuring ingredients one at a time to cook a dish. The chef works step-by-step, and for a complicated dish with many ingredients, this can take a long time. Now imagine a quantum computer as a chef with a magical kitchen, where many combinations of ingredients can be explored at once, and a clever trick makes the bad combinations cancel each other out so that the best recipe stands out. This magic comes from qubits, which, unlike regular bits that are either 0 or 1, can be a blend of both thanks to a property called superposition. For certain problems, such as designing a new material or, possibly, finding the fastest delivery route, this lets quantum computers explore possibilities in ways a regular computer cannot.

Quantum computers aren’t meant to replace your laptop or phone for everyday tasks like emailing or streaming videos. They’re built for specific, tough problems that classical computers struggle with, such as cracking encryption or modeling how molecules interact. Building quantum computers was nearly impossible until recently because qubits are incredibly sensitive—think of them as delicate bubbles that pop if disturbed by heat, noise, or even a stray photon. Only in the last few years have scientists figured out how to keep these bubbles stable long enough to compute, thanks to advances in technology and engineering.

Scientific Explanation: The Mechanics of Quantum Computing

Quantum computing is rooted in quantum mechanics, the physics governing particles at atomic and subatomic scales. Unlike classical bits, which are binary (0 or 1), quantum bits (qubits) are described by a quantum state in a complex vector space:

\[ |\psi\rangle = \alpha|0\rangle + \beta|1\rangle \]

where \(\alpha\) and \(\beta\) are complex amplitudes satisfying \(|\alpha|^2 + |\beta|^2 = 1\). Until it is measured, a qubit in this superposition is neither definitely 0 nor definitely 1. Key quantum phenomena include:

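- Entanglement: A non-classical correlation between qubits, where the joint state cannot be described as separate states for each qubit. For example, in the two-qubit entangled (Bell) state below, measuring both qubits always yields 00 or 11, each with probability 1/2: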

\[ |\Phi\rangle = \frac{1}{\sqrt{2}}\left(|00\rangle + |11\rangle\right) \]

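- Quantum Superposition: A register of \(n\) qubits is described by \(2^n\) amplitudes, one per classical bit string, and a single gate can change all of them at once. This is often called quantum parallelism, but a measurement returns only one outcome, so useful algorithms rely on interference to make the right answer likely.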

- Measurement Collapse: Observing a qubit forces it into a definite state, \(|0\rangle\) or \(|1\rangle\), with probabilities \(|\alpha|^2\) and \(|\beta|^2\) respectively.
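To make the amplitudes, entanglement, and measurement rule above concrete, here is a minimal pure-Python sketch of a small state vector. It is an illustration under stated assumptions, not how real hardware works: the helper names (`probabilities`, `is_normalized`, `hadamard_on_first`, `cnot`, `measure`) are hypothetical, and it assumes the convention that the first qubit is the leftmost bit of each basis label. Applying a Hadamard gate and then a CNOT to \(|00\rangle\) produces the entangled state \(|\Phi\rangle\).

```python
import math
import random

# Minimal state-vector sketch (hypothetical helpers, pure Python).
# A 2-qubit state is a list of 4 complex amplitudes for |00>, |01>, |10>, |11>,
# assuming the first qubit is the leftmost bit of each label.

def probabilities(state):
    """Born rule: each outcome's probability is |amplitude|^2."""
    return [abs(a) ** 2 for a in state]

def is_normalized(state):
    """Check that the probabilities sum to 1 (up to floating-point error)."""
    return math.isclose(sum(probabilities(state)), 1.0)

def hadamard_on_first(state):
    """Hadamard on the first qubit: mixes the |0x> and |1x> amplitudes."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]

def cnot(state):
    """CNOT (control = first qubit): flips the second qubit when the first is 1,
    which swaps the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def measure(state, rng):
    """Sample one outcome as a bit string, with Born-rule probabilities."""
    k = rng.choices(range(len(state)), weights=probabilities(state))[0]
    width = (len(state) - 1).bit_length()
    return format(k, f"0{width}b")

if __name__ == "__main__":
    rng = random.Random(0)

    # Single qubit: alpha = sqrt(0.8), beta = i*sqrt(0.2) (a complex amplitude).
    qubit = [math.sqrt(0.8), 1j * math.sqrt(0.2)]
    print(is_normalized(qubit), probabilities(qubit))

    # H then CNOT on |00> gives (|00> + |11>)/sqrt(2).
    bell = cnot(hadamard_on_first([1, 0, 0, 0]))
    counts = {}
    for _ in range(1000):
        outcome = measure(bell, rng)
        counts[outcome] = counts.get(outcome, 0) + 1
    print(counts)  # only '00' and '11' appear
```

Sampling the Bell state many times yields only `00` and `11`, in roughly equal proportion, which is the correlation described under Entanglement. The single-qubit example shows that a complex amplitude such as \(\beta = i\sqrt{0.2}\) still contributes probability \(|\beta|^2 = 0.2\).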

Quantum computers operate using quantum circuits, composed of gates (e.g., Hadamard, CNOT) that manipulate qubit states. These circuits implement quantum algorithms, such as:

- Shor’s Algorithm: Factors large integers in polynomial time, exponentially faster than the best known classical algorithms.

- Grover’s Algorithm: Quadratic speedup for unstructured search.

- Quantum Phase Estimation: Critical for applications like quantum chemistry simulations.

These algorithms exploit quantum parallelism and interference to achieve computational advantages over classical methods for specific problems.
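Grover’s algorithm is small enough to simulate directly. The sketch below is a classical simulation, so it shows the amplitude mechanics rather than any speedup. It assumes a single marked item and uses a hypothetical `grover_search` helper: an oracle flips the sign of the marked amplitude, and a diffusion step reflects every amplitude about the mean, so interference concentrates probability on the answer after about \(\frac{\pi}{4}\sqrt{N}\) iterations.

```python
import math

def grover_search(n_qubits, marked):
    """Classically simulate Grover's algorithm with one marked item.

    Returns (iterations, probability of measuring `marked`).
    """
    N = 2 ** n_qubits
    # Hadamards on all qubits of |0...0> give the uniform superposition.
    state = [1 / math.sqrt(N)] * N
    # Near-optimal number of Grover iterations for a single marked item.
    iterations = math.floor(math.pi / 4 * math.sqrt(N))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        state[marked] = -state[marked]
        # Diffusion: reflect every amplitude about the mean amplitude.
        mean = sum(state) / N
        state = [2 * mean - a for a in state]
    return iterations, abs(state[marked]) ** 2

if __name__ == "__main__":
    for n in (4, 10):
        its, p = grover_search(n, marked=3)
        print(f"N = {2**n}: {its} iterations, success probability {p:.4f}")
```

For \(N = 16\), three iterations raise the probability of measuring the marked item from \(1/16\) to about 96%, whereas a classical search needs about \(N/2\) guesses on average. Running past the optimal count lowers the probability again, which is why the iteration count is fixed in advance.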

Differences Between Quantum and Classical Computing

| Feature | Classical Computing | Quantum Computing |

|---|---|---|

| Data Unit | Bits (0 or 1). | Qubits (superposition of 0 and 1). |

| Computation | Sequential or parallel, using deterministic or probabilistic logic. | Parallel via superposition, probabilistic outcomes via measurement. |

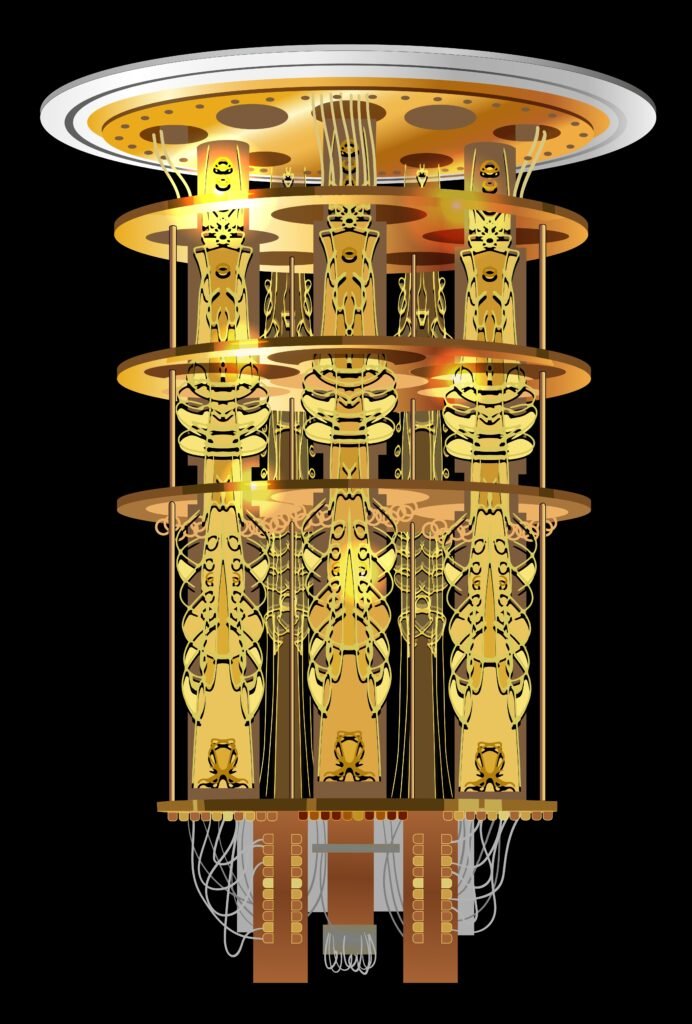

| Hardware | CPUs, GPUs, with silicon-based transistors. | Quantum processors (e.g., superconducting circuits, trapped ions). |

| Algorithms | Broadly applicable (e.g., sorting, rendering). | Specialized (e.g., quantum simulation, optimization). |

| Error Sensitivity | Low error rates, robust hardware. | High error rates, sensitive to environmental noise. |

| Applications | General-purpose: apps, databases, gaming. | Niche: cryptography, molecular modeling, complex optimization. |

Why Quantum Computing is Different

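- Parallelism: Superposition lets a quantum computer act on the amplitudes of many candidate solutions with each operation, rather than splitting work across separate processors as classical parallel computing does; interference then concentrates probability on correct answers.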

- Algorithmic Advantage: Quantum algorithms can solve certain problems much faster than the best known classical algorithms: exponentially faster for factoring and quantum simulation, and quadratically faster for unstructured search.

- Problem Scope: Quantum computers are not general-purpose; they excel at specific tasks like quantum chemistry or combinatorial optimization, while classical computers handle everyday computing needs.

- Hardware Paradigm: Quantum systems require entirely different physical principles (e.g., quantum tunneling, superposition) compared to classical transistor-based systems.
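One way to see why superposition is described as parallelism, and why simulating quantum systems is hard for classical computers, is to count what a classical simulator must store: one complex amplitude for each of the \(2^n\) basis states. The sketch below assumes 16 bytes per amplitude (a complex number stored as two 64-bit floats); `state_vector_bytes` is a hypothetical helper.

```python
def state_vector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory needed to store all 2**n complex amplitudes of an n-qubit state.

    Assumes 16 bytes per amplitude (two 64-bit floats per complex number).
    """
    return (2 ** n_qubits) * bytes_per_amplitude

if __name__ == "__main__":
    for n in (10, 30, 53):
        print(n, "qubits:", state_vector_bytes(n), "bytes")
```

Each added qubit doubles the memory: 10 qubits need 16 KiB, 30 qubits need 16 GiB, and 53 qubits (the size of Google’s 2019 Sycamore processor) need \(2^{57}\) bytes, about 144 petabytes. These figures describe the classical simulator’s cost; a measurement on the quantum device still returns a single \(n\)-bit string, not all \(2^n\) amplitudes.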

Implications for Classical Computers

Quantum computing complements rather than replaces classical computing:

- Specialized Use Cases: Quantum computers target problems where classical systems are inefficient, such as simulating quantum systems and, possibly, some hard optimization problems (no efficient quantum algorithm for NP-hard problems is known). Classical computers remain ideal for general tasks like running operating systems or managing databases.

- Hybrid Computing: Many quantum applications, like the Quantum Approximate Optimization Algorithm (QAOA), rely on classical computers to tune circuit parameters in a feedback loop and to handle pre- and post-processing, creating a symbiotic relationship.

- Cryptographic Disruption: Shor’s algorithm, run on a sufficiently large fault-tolerant quantum computer, could break widely used public-key encryption (e.g., RSA), which is driving the development of quantum-resistant cryptography.

- Industry Impact: Quantum computing could accelerate innovation in fields like pharmaceuticals, logistics, and artificial intelligence, but classical computers will continue to dominate most computational infrastructure.
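The threat to RSA comes from how Shor’s algorithm turns factoring into period finding: for a random \(a\) coprime to \(N\), find the smallest \(r\) with \(a^r \equiv 1 \pmod N\); if \(r\) is even and \(a^{r/2} \not\equiv -1 \pmod N\), then \(\gcd(a^{r/2} - 1, N)\) is a nontrivial factor. Only the period-finding step needs a quantum computer. The sketch below, with hypothetical helper names, performs that step by brute force, which is exactly what becomes infeasible classically for RSA-sized numbers, so that the rest of the reduction can be seen end to end.

```python
import math

def find_period_classically(a, N):
    """Smallest r > 0 with a**r % N == 1, found by brute force.

    This is the step Shor's algorithm performs efficiently on a quantum
    computer; brute force takes time exponential in the digits of N.
    Assumes gcd(a, N) == 1, otherwise no such r exists.
    """
    x, r = a % N, 1
    while x != 1:
        x = (x * a) % N
        r += 1
    return r

def shor_classical_reduction(N, a):
    """Try to split N using the period of a mod N.

    Returns a pair of factors, or None if this choice of a fails.
    """
    g = math.gcd(a, N)
    if g != 1:
        return g, N // g          # lucky guess: a already shares a factor with N
    r = find_period_classically(a, N)
    if r % 2 == 1:
        return None               # odd period: retry with a different a
    y = pow(a, r // 2, N)
    if y == N - 1:
        return None               # a^(r/2) = -1 mod N: retry with a different a
    p = math.gcd(y - 1, N)        # nontrivial because y*y = 1 and y != +-1 mod N
    return p, N // p

if __name__ == "__main__":
    print(shor_classical_reduction(15, 7))
```

For \(N = 15\) and \(a = 7\), the powers of 7 modulo 15 cycle through 7, 4, 13, 1, so \(r = 4\), \(7^2 \bmod 15 = 4\), and \(\gcd(3, 15) = 3\) recovers the factors 3 and 5, the same case demonstrated in IBM’s 2001 NMR experiment.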

Why Was Quantum Computing Impossible Until Recently?

Quantum computing faced significant scientific and engineering hurdles that delayed its realization:

- Decoherence:

- Challenge: Qubits lose their quantum state (decohere) due to interactions with the environment (e.g., thermal noise, electromagnetic fields).

- Solution: Cryogenic systems (dilution refrigerators operating at ~10–20 mK for superconducting qubits) and improved isolation techniques extend coherence times to microseconds or milliseconds.

- High Error Rates:

- Challenge: Quantum gates and measurements typically have error rates of 0.1–1%, compared with error rates on the order of \(10^{-15}\) for classical logic.

- Solution: Quantum error correction (e.g., surface codes) and fault-tolerant designs, though these demand significant qubit overhead.

- Qubit Scalability:

- Challenge: Increasing qubit count while maintaining low noise and precise control is complex, as qubits must interact without crosstalk.

- Solution: Modular architectures and advances in qubit types (superconducting, trapped ions, photonics).

- Precise Control:

- Challenge: Qubits require exact manipulation via lasers, microwaves, or magnetic fields, and measurements must preserve quantum information.

- Solution: High-fidelity control systems and non-destructive readout techniques.

- Materials and Fabrication:

- Challenge: Quantum hardware demands ultra-pure materials and extreme conditions (e.g., high vacuum, low temperatures).

- Solution: Progress in nanotechnology, superconducting circuits, and cryogenic engineering.
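The gap between 0.1–1% gate errors and classical reliability compounds quickly. The sketch below, assuming independent errors (a simplification), estimates the chance that a circuit runs with no gate error at all, and uses the three-bit repetition code with majority voting as a toy model of why error correction helps. It is not a surface code: it corrects only bit flips, and both function names are hypothetical.

```python
import math

def circuit_success_probability(gate_error, num_gates):
    """Chance that no gate fails, assuming independent errors per gate."""
    return (1 - gate_error) ** num_gates

def repetition_code_logical_error(p, n=3):
    """Logical error rate of an n-copy repetition code with majority voting.

    Decoding fails when more than half of the n copies flip, each
    independently with probability p. (A bit-flip-only toy model.)
    """
    return sum(
        math.comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )

if __name__ == "__main__":
    for p in (0.001, 0.01):
        print(f"gate error {p}: 1000-gate success "
              f"{circuit_success_probability(p, 1000):.4g}, "
              f"3-copy logical error {repetition_code_logical_error(p):.3g}")
```

With a 0.1% error rate, a 1,000-gate circuit finishes error-free only about 37% of the time. Encoding one bit in three copies lowers a 1% error rate to about 0.03% (\(3p^2 - 2p^3\)), at the cost of three physical units per logical one. For this toy code the encoding helps only while \(p < 0.5\), a simple analogue of the error threshold that real codes such as the surface code require.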

History of Quantum Computing

The journey from theory to reality spans over four decades, marked by theoretical insights, experimental milestones, and technological breakthroughs.

1980s: Conceptual Beginnings

- 1980: Paul Benioff proposed a quantum model of computation, showing that quantum systems could theoretically compute.

- 1982: Richard Feynman argued that quantum computers could efficiently simulate quantum systems, a task infeasible for classical computers.

- 1985: David Deutsch introduced the quantum Turing machine, laying the theoretical foundation for universal quantum computing.

1990s: Algorithmic Breakthroughs

- 1994: Peter Shor developed Shor’s algorithm, demonstrating exponential speedup for factoring large numbers, which galvanized interest due to its cryptographic implications.

- 1996: Lov Grover’s algorithm offered a quadratic speedup for unstructured search, broadening quantum computing’s potential.

- 1998: First experimental demonstrations using nuclear magnetic resonance (NMR) systems with 2–3 qubits, though not scalable.

2000s: Early Hardware and Error Correction

- 2001: IBM implemented Shor’s algorithm on a 7-qubit NMR system, factoring 15 into 3 and 5, a proof-of-concept milestone.

- 2000s: Development of scalable qubit platforms, including superconducting qubits (e.g., Yale, IBM) and trapped ions (e.g., NIST, University of Innsbruck).

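- 2007: Building on quantum error correction codes introduced in the mid-1990s, Raussendorf and Harrington showed that the surface code can tolerate physical error rates approaching 1%, making it a leading approach to addressing noise in quantum systems.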

2010s: Rise of the NISQ Era

- 2011: D-Wave Systems launched the D-Wave One, a quantum annealer for optimization, though limited to specific tasks.

- 2016: IBM’s Quantum Experience provided public cloud access to a 5-qubit quantum computer, democratizing research.

- 2019: Google announced quantum supremacy with its 53-qubit Sycamore processor, solving a contrived problem faster than a classical supercomputer, though debates persisted about practical relevance.

2020s: Scaling and Practical Applications

- 2020–2023: Companies like IBM (127-qubit Eagle, 433-qubit Osprey), IonQ, and Rigetti built NISQ systems ranging from tens to hundreds of qubits, still limited by noise.

- 2024: Advances in error mitigation and hybrid quantum-classical algorithms enabled early applications in quantum chemistry and optimization.

- 2025 (Current): Quantum computing remains in the Noisy Intermediate-Scale Quantum (NISQ) era, with systems up to ~1000 qubits. Practical quantum advantage is anticipated in the coming years.

Why Quantum Computing is Now Feasible

Recent advances have overcome longstanding barriers:

- Qubit Technologies: Superconducting qubits (IBM, Google), trapped ions (IonQ), and photonic systems (PsiQuantum) offer improved coherence and scalability.

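- Error Suppression and Mitigation: Techniques such as dynamical decoupling (which suppresses noise during computation) and zero-noise extrapolation (which estimates noise-free results by extrapolating from runs at amplified noise) reduce the impact of errors without full error correction, enabling NISQ applications.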

- Cloud Platforms: IBM Quantum, Amazon Braket, and Microsoft Azure Quantum provide access to quantum hardware, fostering global research.

- Funding and Collaboration: Governments (e.g., U.S. National Quantum Initiative, EU Quantum Flagship) and companies (e.g., Google, Microsoft) invest billions in quantum research.

- Software Development: Tools like Qiskit, Cirq, and Q# simplify quantum programming, bridging theory and practice.

Current State and Future Outlook (July 18, 2025)

As of July 18, 2025, quantum computing is in the NISQ era, with systems offering tens to roughly 1,000 qubits but limited by noise. Key developments include:

- Applications: Prototypes in drug discovery (e.g., Merck), financial modeling (e.g., Goldman Sachs), and logistics optimization (e.g., DHL).

- Hardware Goals: IBM’s roadmap targets error-corrected, fault-tolerant processors by the end of the decade, with larger fault-tolerant systems projected for the 2030s.

- Challenges: Achieving fault tolerance, scaling qubits, and reducing error rates remain critical hurdles.

Future Outlook:

- Near-term (5–10 years): Hybrid quantum-classical systems will enable practical applications in optimization, machine learning, and small-scale quantum simulations.

- Long-term (10–20 years): Fault-tolerant quantum computers could disrupt fields like cryptography, materials science, and artificial intelligence, coexisting with classical systems for specialized tasks.
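The hybrid quantum-classical pattern behind these near-term systems (and behind QAOA) can be sketched as a loop in which a classical optimizer adjusts circuit parameters based on measured expectation values. This is a deliberately tiny, VQE-style toy rather than QAOA itself. `quantum_expectation` is a hypothetical classical stand-in for a quantum processor, returning \(\langle Z\rangle = \cos\theta\) for one qubit rotated by \(R_Y(\theta)\), and gradients come from the parameter-shift rule, which evaluates the same circuit at shifted angles instead of differentiating it symbolically.

```python
import math

def quantum_expectation(theta):
    """Stand-in for a quantum processor (assumption): <Z> after RY(theta) on |0>.

    RY(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>, so
    <Z> = cos^2(theta/2) - sin^2(theta/2) = cos(theta).
    On real hardware this would be estimated from repeated measurements.
    """
    return math.cos(theta)

def parameter_shift_gradient(f, theta):
    """Gradient for rotation gates like RY via the parameter-shift rule."""
    return (f(theta + math.pi / 2) - f(theta - math.pi / 2)) / 2

def hybrid_minimize(f, theta=0.1, learning_rate=0.4, steps=100):
    """Classical gradient descent steering the (simulated) quantum circuit."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(f, theta)
    return theta, f(theta)

if __name__ == "__main__":
    theta, energy = hybrid_minimize(quantum_expectation)
    print(f"theta = {theta:.6f}, <Z> = {energy:.6f}")
```

The loop converges to \(\theta = \pi\), where the qubit is in \(|1\rangle\) and \(\langle Z\rangle = -1\). On real hardware each expectation value would be estimated from many measurement shots, so the gradient is noisy and the classical optimizer must tolerate that noise.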

Conclusion

Quantum computing is a transformative technology that uses quantum mechanics to process information, offering potential speedups for problems like factoring, quantum simulation, and optimization. Unlike classical computers, which manipulate binary bits, quantum computers manipulate qubits in superposition and entanglement and use interference to amplify correct answers, making them fundamentally different. Historically deemed impossible due to decoherence, high error rates, and engineering challenges, quantum computing has become feasible through decades of theoretical and experimental progress, from Feynman’s 1982 vision to today’s NISQ systems. While classical computers will remain essential for general computing, quantum computers promise to transform specific domains, heralding a future where both technologies work in tandem to address humanity’s greatest challenges.
